Apple's vision

The Vision Pro sets the stage for a new kind of computer

Welcome back to Multicore for Tuesday, June 6th.

The Apple Vision Pro is a computer.

This sounds obvious. The Apple Watch is a computer. The Meta Quest 2 is a computer, too. Heck, AirPods are computers. But the Apple Vision Pro is a computer.

With that revelation, everything about Apple's latest platform falls into place. It explains the high price, and it explains why Apple would push forward with the project at all. Apple is a computer company, and it believes that the Vision Pro's take on augmented reality is nothing short of a new computing paradigm.

Meta believes the same thing, and has put out solid products like the Quest 2. But just under a decade after buying Oculus in a bid to own that future, what Meta has to show for it today is a moderately popular VR gaming platform and some half-baked virtual meeting software in service of a "metaverse" concept that has yet to take any discernible shape.

Apple's take is that an AR headset can be your work computer, your TV, your communications portal, your everything. I have countless questions about the device and doubts about whether the platform will take off, but for the most part I thought this was a hugely impressive introduction, and one that could well pave a future path for Apple.

The Apple Vision Pro — thankfully not the "Reality Pro", as had been reported — is a VR headset with capable AR functionality that allows you to see your surrounding environment. It achieves this through a combination of cameras and high-resolution OLED microdisplays. You can block out the world around you, as is the default state for any VR headset, but you're also able to access a "passthrough" view of your surroundings at any time.

Throughout the much-rumoured run-up to this device's launch, I've mostly referred to it as a VR headset, because that's what it sounded like and, really, what it is. Most existing VR headsets already offer a passthrough view of your environment, with generally shaky results. But I was surprised by the degree to which Apple is focusing on that aspect of the Vision Pro. By default, the UI is overlaid on the room that you're in, which shows high confidence in the Vision Pro as an AR-first device.

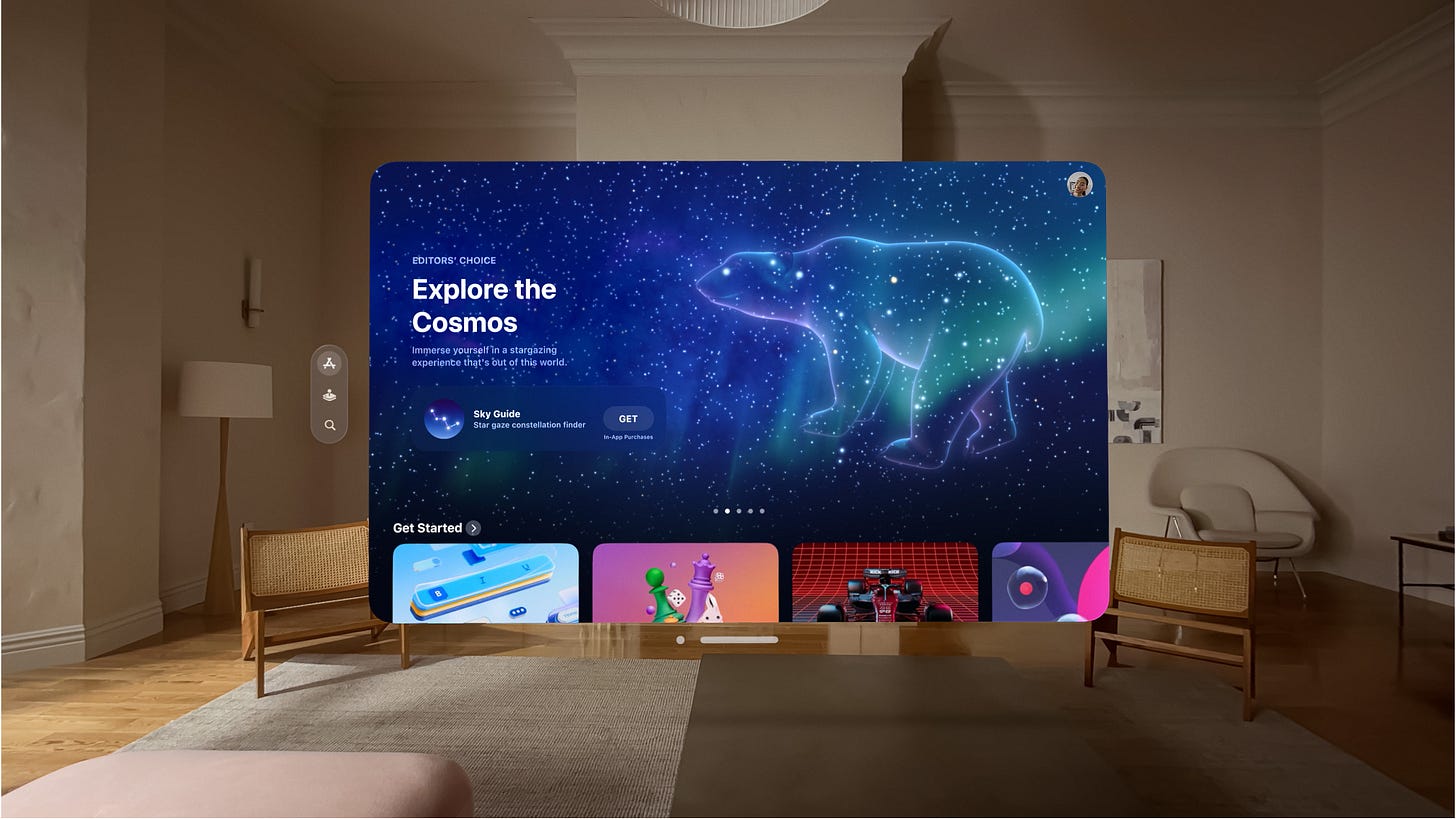

That said, outside of an introductory remark where Tim Cook called AR a "profound technology", Apple preferred to use another term to refer to the Vision Pro: "spatial computing". You put the headset on, and computing happens around you in your physical space. You might be working in an app that's floating in front of you with a browser window on the left and co-workers' video chat feeds on the right, for example. You're no longer limited by the physical constraints of your laptop or computer monitor.

The idea isn't new. I've been experimenting with this sort of setup ever since the first Oculus Rift and HTC Vive came out in 2016. I get the potential, but I know that so far the hardware and software really haven't been in place to make the most of it. Headset displays aren't sharp enough, and you have to use hacky (in a good way) apps like Virtual Desktop to make anything happen.

Apple's visionOS is an attempt to make it happen for real. As I expected, the user interface doesn't involve any physical controllers; it's controlled with your hands, voice, and eyes. The basic hand-tracking metaphors aren't all that different to what Meta has on its Quest headsets, with gestures like pinching your thumb and forefinger together to click and drag things around. It also makes heavy use of Siri voice commands, and of eye-tracking to highlight actionable objects.

The Vision Pro has Safari and Mail. Microsoft Office. Zoom. Adobe Lightroom, if you're okay with just having the iPad app float around in your living room. The sheer resolution of the OLED microdisplays should be a transformative leap over other VR headsets, allowing you to comfortably read text in apps or even from objects in the real world. You can pair keyboards, trackpads, and game controllers. You can even bring a macOS desktop right into visionOS by looking directly at your Mac, though it's unclear how I could get this to work with the Mac mini that powers Multicore from under a shelf.

In other words, this is at least theoretically a capable computer for getting work done. It won't be the easiest or the most efficient tool for a lot of jobs — Lord knows the iPad often isn't, even 13 years on — but Apple is explicitly positioning the Vision Pro as a general-purpose computing device. And that's important context for everything else it can do.